· 3 min read

300+ Billion Tokens and Counting -- The Path to our Kelvin Legal GPT Model

A domain-specific Legal Large Language Model

When we first started 273 Ventures late last year, some of you noticed the strange counter at the bottom of our site. This cryptically-increasing number tracked our data collection efforts, begun last December.

In August of this year, we finally made clear what this counter was by announcing the Kelvin Legal DataPack. This offering was the first large-scale dataset with clear IP provenance and commercial use rights in the market. At that time, I noted “high-quality data is the foundation of all large language models. Since late 2022, the team has been hard at work collecting, curating, and enriching/annotating the largest corpus of legal documents directly available for bulk download by the industry. It’s a proud moment for us, as we built the DataPack with our own Kelvin Legal Data OS, proving its extensive and scalable capabilities processing and enriching legal data.”

Since our August announcement, we have continued to collect and enrich our Kelvin Legal Data Pack, and we now have over 300 billion legal and financial tokens from nearly 100TB of content. This 300B+ token threshold was an important trigger for our team, because it was our internal threshold to begin a Kelvin Legal GPT Model.

One of our company values is that we believe in transparency. Not only is transparency the right thing to do, but current trends in public policy and litigation suggest that, at least for certain jurisdictions, transparency in AI models may soon be required. Thus, we have been building our company in public, including open source academic publications and software, extensive documentation and educational content through our 273 Ventures Blog and Kelvin.Legal Blog.

In the spirit of transparency, we have long communicated that we believed it was possible to build an accessible, foundational, legal large language model (LLM) that would match legal domain-specific performance of models such as GPT. This is an informed scientific conjecture, but not a guarantee. We were first inspired by Bloomberg’s 50B parameter model, released this spring; since then, Microsoft’s Phi models and Google’s Gemini Nano series, both small enough to run on laptops and phones, have demonstrated that this conjecture is almost certainly true.

We have already begun training this model - the Kelvin Legal Large Language Model (Kelvin LLLM), as we have named it. Customers are already using this family of models through the Kelvin embeddings and summarization models, available via API, ONNX, or within our chat experience and agent API.

Now, we are announcing our instruction-aligned Kelvin LLLM model, which will be released in beta in early 2024. This model will come in two sizes and will be available for local deployment as an internal API or through our chat experience and agent.

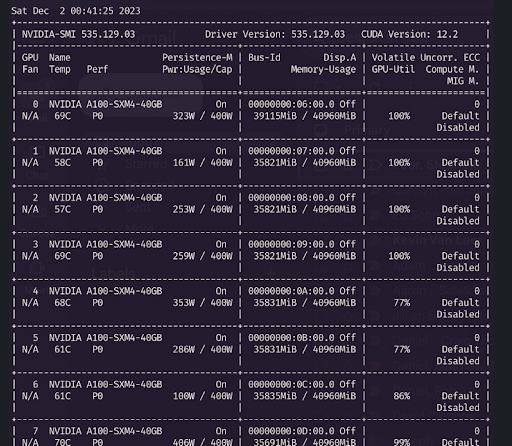

And yeah - there is nothing like some A100s to keep us warm during the winter :)

Michael Bommarito II

Mike is a Co-Founding Partner and CEO at 273 Ventures.

Mike is a serial entrepreneur and investor with over 20 years of experience in the financial, legal, and technology industries. He has worked with the world’s largest banks, governments, law firms, asset managers, technology companies, and reinsurers, spanning roles in strategy, technology, operations, and finance. Mike previously co-founded and successfully exited LexPredict, a legal A.I. company.

Would you like to learn more about Legal A.I.? Send your questions to Mike by email or LinkedIn.